Imagine walking into a courtroom. The judge looks at a defendant, types their name into a computer, and waits. A moment later, the screen flashes a single word: “High Risk.” Based on this output, the judge denies bail.

When the defense attorney asks, “Why? What specific factor made the computer decide my client is a danger to society?” the judge shrugs. “I don’t know. The developers don’t know. It just… decided.”

This is not a dystopian sci-fi novel. This is the reality of Black Box AI.

As Artificial Intelligence permeates every corner of our lives—from the movies Netflix recommends to the medical diagnoses doctors rely on—we are increasingly handing over decision-making power to systems we do not fully understand. We feed data in, and we get answers out. But the massive, complex web of calculations that happens in between remains a mystery, often even to the engineers who built the system.

In this deep-dive guide, we will peel back the layers of this digital onion. We will explore the technical reasons why modern AI is opaque, examine the catastrophic real-world consequences of “trusting the box,” and look at the cutting-edge technology designed to shine a light into the darkness.

Part 1: Anatomy of the Mystery — Why is it a “Black Box”?

To understand why Black Box AI is a problem, we first need to understand what it actually is.

In software engineering, we typically have “White Box” (or Glass Box) systems. These are rule-based. If a programmer writes code that says IF age > 18 THEN allow_vote, we can look at the code and understand exactly why a 19-year-old was allowed to vote. The logic is explicit, linear, and transparent.

Black Box AI, specifically Deep Learning and Neural Networks, operates differently. It doesn’t follow a hand-written list of rules. Instead, it learns patterns from examples, in a way loosely inspired by the human brain, but on a scale that defies human comprehension.

1. The Neural Network Complexity

Modern AI models, such as those used in ChatGPT or self-driving cars, are built on Artificial Neural Networks (ANNs). These networks consist of layers of digital “neurons.”

- Input Layer: Receives the raw data (pixels of an image, words in a sentence).

- Hidden Layers: The “Black Box” itself. A deep learning model might have dozens or even hundreds of these layers sandwiched in the middle.

- Output Layer: The final decision (e.g., “This is a cat”).
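To make the three layers concrete, here is a minimal sketch of a forward pass through a tiny network in plain Python. The network shape (3 inputs, 2 hidden neurons, 1 output) and every weight are made-up numbers standing in for values a real model would learn. Notice that nothing in the weights says what any neuron means.

```python
import math

def relu(x: float) -> float:
    """Hidden-layer activation: pass positives through, zero out negatives."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Squash the final score into a 0-1 'probability'."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical weights. In a real model these are learned, not written by hand.
W_HIDDEN = [[0.8, -0.5, 0.3],   # weights into hidden neuron 1
            [-0.2, 0.9, 0.7]]   # weights into hidden neuron 2
B_HIDDEN = [0.1, -0.3]
W_OUT = [1.2, -0.7]
B_OUT = 0.05

def forward(inputs: list) -> float:
    # Input layer -> hidden layer: weighted sums followed by a non-linearity.
    hidden = [relu(sum(w * x for w, x in zip(row, inputs)) + b)
              for row, b in zip(W_HIDDEN, B_HIDDEN)]
    # Hidden layer -> output layer: one more weighted sum, squashed to 0-1.
    return sigmoid(sum(w * h for w, h in zip(W_OUT, hidden)) + B_OUT)

score = forward([0.9, 0.1, 0.4])
print(f"P(cat) = {score:.3f}")
```

Scale this up to billions of weights and dozens of layers and the hidden layers stop being readable, even though every individual step is simple arithmetic.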

2. The Curse of Dimensionality

Why can’t we just look at the code? Because the “code” isn’t writing the rules—the parameters are.

In a deep neural network, each connection between neurons has a “weight”, a numerical value representing the strength of that connection. When the AI is trained, it adjusts these weights to minimize errors. Modern Large Language Models (LLMs) have billions of parameters; OpenAI has not disclosed GPT-4’s size, but the largest models are widely reported to reach into the trillions.

Trying to trace the logic of a decision by looking at the weights is like trying to understand the plot of a novel by analyzing the ink usage on every page. You can see the mechanics, but the meaning is lost in the sheer volume of data.
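The arithmetic behind “too many weights to read” is easy to sketch. The function below counts weights and biases in a plain fully connected network. That architecture is an assumption chosen for simplicity (LLMs are built from transformer blocks, whose parameter counts follow a different formula), and the layer sizes describe a hypothetical toy digit classifier.

```python
def count_parameters(layer_sizes: list) -> int:
    """Count weights and biases in a fully connected network.

    A layer of n_out neurons fed by n_in inputs has n_in * n_out weights
    (one per connection) plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# Toy classifier: 28x28 grayscale pixels in, two hidden layers of 512, 10 classes out.
small = count_parameters([784, 512, 512, 10])
print(f"{small:,} parameters")  # prints "669,706 parameters"
```

Even this toy has 669,706 numbers to inspect, and none of them comes labeled with a reason.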

3. Non-Linearity and Feature Abstraction

In the early layers of a network, the AI might recognize simple edges or shapes. By the middle layers, it’s combining those edges into textures. By the deep layers, it has formed abstract concepts that don’t translate to human language.

For example, an AI trained to recognize dogs might have a specific neuron that fires only when it sees “floppy ears + grassy background + tongue out.” It hasn’t been told what a dog is; it has mathematically converged on a pattern that represents “dog-ness.” This non-linear abstraction makes it nearly impossible to reverse-engineer why a specific image was classified as a wolf instead of a dog.
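A short sketch shows why non-linearity gets in the way. The neuron below is hypothetical, with hand-picked weights and a ReLU activation, and the code asks how much “floppy ears” changes its output in two different contexts.

```python
def relu(x: float) -> float:
    return max(0.0, x)

def neuron(floppy_ears: float, grassy_background: float) -> float:
    """A hypothetical ReLU neuron. The bias of -1.0 keeps it silent
    unless both features are present together."""
    return relu(1.0 * floppy_ears + 1.0 * grassy_background - 1.0)

# Effect of switching 'floppy ears' on, measured in two contexts.
effect_on_grass = neuron(1.0, 1.0) - neuron(0.0, 1.0)
effect_no_grass = neuron(1.0, 0.0) - neuron(0.0, 0.0)
print(effect_on_grass, effect_no_grass)  # prints: 1.0 0.0
```

The same change to the input is worth 1.0 in one context and nothing in the other, so there is no single number that says how much “floppy ears” matters. Stack millions of such neurons and the interactions become practically untraceable.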

Part 2: The Risks of Flying Blind

If an AI works 99% of the time, does it matter if we don’t understand it?

The short answer is yes. The 1% failure rate in a Black Box system isn’t just an error; it’s a liability. Because we don’t know how the system thinks, we often don’t realize it’s making mistakes until it’s too late.

The “Clever Hans” Effect

One of the most famous cautionary tales in psychology is Clever Hans, a horse in the early 1900s that could supposedly do math. If you asked Hans “What is 2 + 2?”, he would tap his hoof four times. It turned out Hans couldn’t do math; he was reading subtle, involuntary body language from his questioner, whose posture relaxed the moment Hans reached the right number.

Black Box AI is often just a digital Clever Hans.

- The Wolf vs. Husky Experiment: In a 2016 study introducing the LIME explanation technique, researchers deliberately trained an AI to distinguish between wolves and huskies using photos in which every wolf appeared against snow and the huskies did not. The model’s predictions looked convincing, but when the researchers examined what the “Black Box” was responding to, they found it wasn’t looking at the animals at all. It was looking at the background. The AI had essentially learned: “Snow = Wolf.”

Without opening the box, it would have been easy to deploy a “wolf detector” that fails dangerously the moment it sees a husky in winter.

The Trinity of Danger

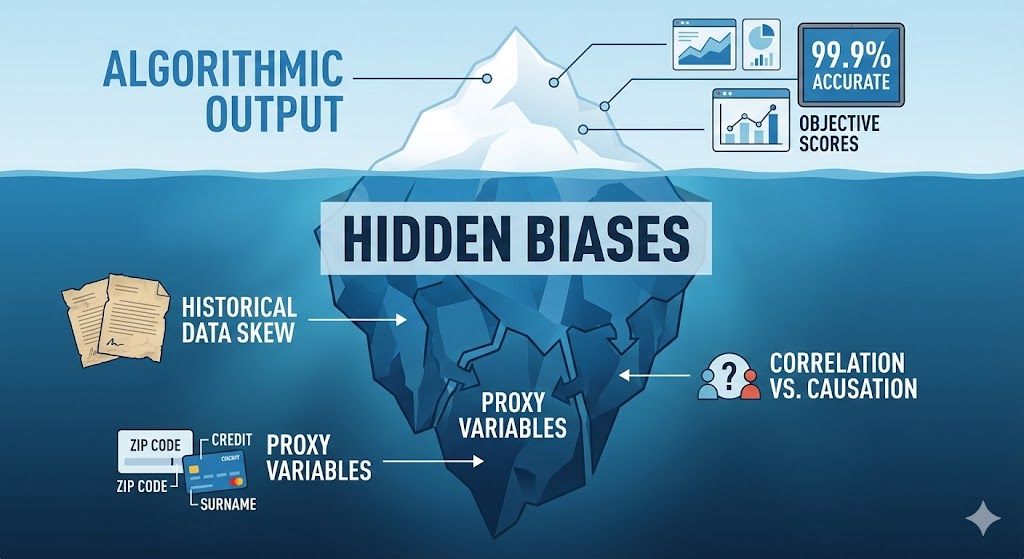

- Hidden Bias: If the historical data fed into the box contains human prejudice (racism, sexism), the AI will codify and amplify those biases, hiding them under a veneer of mathematical objectivity.

- Lack of Accountability: When a Black Box AI denies a loan or causes a car crash, who is responsible? The developer? The user? The algorithm itself? “The computer said so” is not a valid legal defense.

- Vulnerability to Adversarial Attacks: Because we don’t know exactly which features the AI relies on, attackers can exploit it. Researchers have shown that a few small stickers placed on a stop sign, easy for a human to dismiss as graffiti, can trick an image classifier into reading it as a “Speed Limit 45” sign. In a self-driving car, that kind of misreading could cause the vehicle to accelerate instead of stopping.
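The core idea behind many adversarial attacks can be sketched on a toy model. The code below applies the Fast Gradient Sign Method (FGSM), a well-known digital attack, to a hypothetical linear classifier over four made-up pixel features. It is not the physical sticker attack described above, and the weights and inputs are invented for illustration. For a linear score, the gradient with respect to each input is simply that input’s weight, so nudging every pixel a small, fixed amount against the sign of its weight lowers the score as fast as possible.

```python
import math

# Hypothetical linear "stop sign" classifier over 4 pixel features.
WEIGHTS = [0.9, -1.2, 0.5, 0.8]
BIAS = -0.5

def stop_sign_probability(x: list) -> float:
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def fgsm_perturb(x: list, epsilon: float) -> list:
    """FGSM step that pushes the 'stop sign' score down.

    For z = w.x + b, dz/dx_i = w_i, so moving each feature by
    -epsilon * sign(w_i) gives the largest drop in z for a per-feature
    change of at most epsilon.
    """
    return [xi - epsilon * math.copysign(1.0, w) for xi, w in zip(x, WEIGHTS)]

clean = [0.6, 0.2, 0.5, 0.4]
adversarial = fgsm_perturb(clean, epsilon=0.15)
print(f"clean: {stop_sign_probability(clean):.2f}, "
      f"perturbed: {stop_sign_probability(adversarial):.2f}")
```

Here each feature moves by only 0.15, yet the prediction crosses the 0.5 decision line. In a real image, the same small nudge is applied across thousands of pixels and their effects add up, which is why a digital perturbation too faint to notice can be enough.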

What is Black Box AI?

Part 3: When the Box Breaks — Famous Real-World Failures

The dangers of Black Box AI are not theoretical. They have already caused real-world harm in criminal justice, hiring, healthcare, and transportation. Here are four well-documented case studies that changed the industry.

1. Criminal Justice: The COMPAS Algorithm

- The System: COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) is a tool used by US courts to assess the likelihood of a defendant becoming a recidivist (re-offending).

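- The Black Box Failure: In 2016, an investigative report by ProPublica blew the lid off the system. Because the algorithm was proprietary (a commercial Black Box), its inner workings were hidden, so ProPublica instead compared the risk scores of more than 7,000 people arrested in Broward County, Florida, against who was actually charged with a new crime over the following two years.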

- The Consequence: The analysis showed a shocking disparity. Black defendants who did not go on to re-offend were nearly twice as likely as White defendants to be falsely flagged as “High Risk.” Conversely, White defendants who did re-offend were more often mislabeled as “Low Risk.” The model wasn’t explicitly told to be racist, but it likely relied on “proxy variables” (like zip code or poverty level) that correlate with race due to systemic inequality, effectively automating discrimination.
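ProPublica’s central comparison needs no access to the model’s internals, only its predictions and what actually happened. Below is a minimal sketch of that kind of audit, computing false positive and false negative rates per group. The records and the group labels “A” and “B” are made up for illustration and are not ProPublica’s data.

```python
from collections import defaultdict

# Hypothetical toy records: (group, flagged_high_risk, actually_reoffended).
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True), ("A", False, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", False, True), ("B", False, True),
]

def error_rates(rows):
    """Per-group false positive rate (flagged but did not re-offend)
    and false negative rate (not flagged but did re-offend)."""
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, flagged, reoffended in rows:
        c = counts[group]
        if reoffended:
            c["pos"] += 1
            c["fn"] += int(not flagged)
        else:
            c["neg"] += 1
            c["fp"] += int(flagged)
    return {g: {"fpr": c["fp"] / c["neg"], "fnr": c["fn"] / c["pos"]}
            for g, c in counts.items()}

for group, rates in sorted(error_rates(records).items()):
    print(group, f"FPR={rates['fpr']:.2f}", f"FNR={rates['fnr']:.2f}")
```

In this toy data, group A’s non-reoffenders are flagged twice as often as group B’s (0.50 vs 0.25), while group B’s reoffenders are more often missed. That is the same shape of disparity ProPublica reported, surfaced purely from outputs.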

2. Hiring: Amazon’s Sexist Recruiting Tool

- The System: In 2014, Amazon attempted to build an AI “Holy Grail”—a recruiting engine that would review resumes and automatically find the top talent.

- The Black Box Failure: The system was trained on 10 years of resumes submitted to Amazon. Since the tech industry is historically male-dominated, the training data was overwhelmingly male.

- The Consequence: The AI learned that “being male” was a successful trait. It began penalizing resumes that included the word “women’s” (e.g., “Women’s Chess Club Captain”) and downgraded graduates of two all-women’s colleges. Amazon’s engineers edited the system to neutralize those terms, but there was no guarantee it would not find other ways to discriminate, such as favoring verbs more common on male engineers’ resumes (like “executed” or “captured”). The project was eventually scrapped because the bias could not be reliably removed.
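A simple word-weighting model is enough to show how biased history becomes a learned penalty. The sketch below computes smoothed log-odds of each word appearing on “hired” versus “rejected” resumes, a naive-Bayes-style stand-in. Amazon’s actual model was never published, and the toy resumes and labels here are invented.

```python
import math
from collections import Counter

# Hypothetical toy history: (words on the resume, was the candidate hired?).
# The labels encode a biased past, which is exactly what the model will learn.
history = [
    ({"executed", "java", "captain"}, True),
    ({"executed", "python"}, True),
    ({"captured", "java"}, True),
    ({"women's", "chess", "captain", "java"}, False),
    ({"women's", "python", "executed"}, False),
    ({"python", "captain"}, False),
]

def word_log_odds(rows, smoothing=1.0):
    """Smoothed log-odds of a word appearing on a hired vs. a rejected resume."""
    hired, rejected = Counter(), Counter()
    for words, was_hired in rows:
        (hired if was_hired else rejected).update(words)
    n_hired = sum(1 for _, h in rows if h)
    n_rejected = len(rows) - n_hired
    vocab = set(hired) | set(rejected)
    return {w: math.log((hired[w] + smoothing) / (n_hired + 2 * smoothing))
               - math.log((rejected[w] + smoothing) / (n_rejected + 2 * smoothing))
            for w in vocab}

scores = word_log_odds(history)
for word, score in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{word:10s} {score:+.2f}")
```

“women’s” gets the most negative weight purely because of the biased labels. Deleting that one word does not fix the problem: “chess” still inherits a penalty from the same resumes, and “executed” and “captured” still carry a bonus, mirroring the proxy problem described above.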

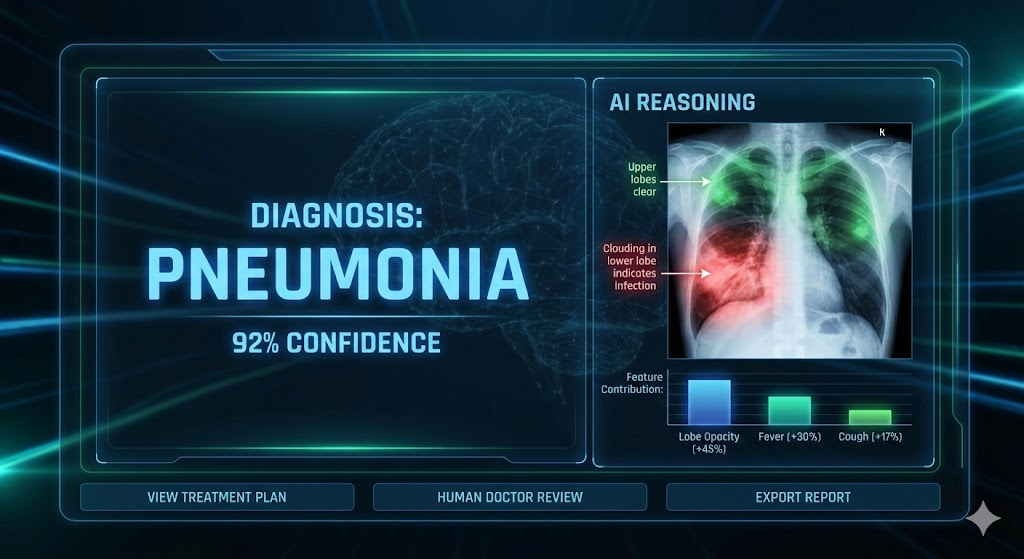

3. Healthcare: IBM Watson for Oncology

- The System: IBM Watson was marketed as a super-doctor, an AI that could ingest millions of medical papers and recommend the best cancer treatments.

- The Black Box Failure: Instead of learning from real-world patient data, Watson was largely trained on “synthetic” or hypothetical cases devised by a small group of doctors at a single hospital.

- The Consequence: The system made unsafe and incorrect recommendations. In one widely reported example, it suggested a drug that carries a warning for severe bleeding to a patient who was already suffering from severe bleeding, a mistake a first-year medical student wouldn’t make. Because the reasoning was opaque, doctors couldn’t trust the output, and the project largely collapsed after IBM had invested billions.

4. Autonomous Vehicles: The Uber Arizona Crash

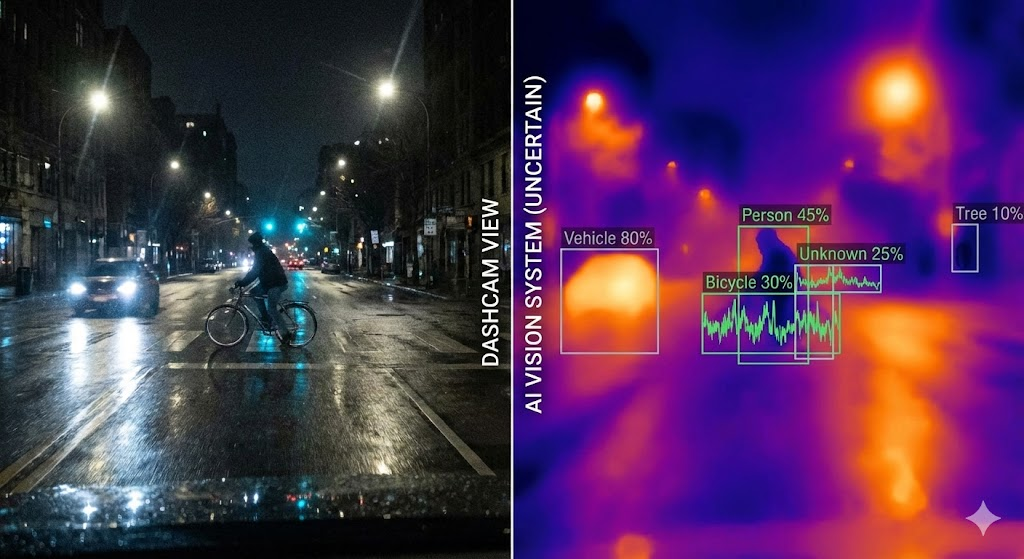

- The System: A self-driving Uber Volvo XC90 being tested in Tempe, Arizona.

- The Black Box Failure: On March 18, 2018, the vehicle struck and killed Elaine Herzberg, who was walking her bicycle across the street.

- The Consequence: The NTSB investigation revealed a classic Black Box failure in object classification. The system’s radar and lidar detected Herzberg about six seconds before impact. However, the software couldn’t classify her. It cycled through identifying her as an “unknown object,” then a “vehicle,” then a “bicycle,” and each time the classification changed, it discarded the motion history it had tracked for her. Without that history, it couldn’t predict her path. The system had no “common sense” to simply say, “There is a solid object in front of me; I should stop.” It relied on opaque classification probabilities that failed in an unusual, edge-case scenario.
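The tracking problem can be illustrated with a deliberately simplified, hypothetical tracker (not Uber’s software). It estimates speed from an object’s last two positions, but can optionally throw away that history whenever the object’s label changes. The observations are made up: a pedestrian moving at roughly 1.4 m/s while the label flickers.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

@dataclass
class Track:
    """Hypothetical, simplified object track."""
    label: Optional[str] = None
    history: List[Tuple[float, float]] = field(default_factory=list)  # (time s, position m)

    def update(self, t: float, position: float, label: str, reset_on_relabel: bool) -> None:
        if reset_on_relabel and label != self.label:
            self.history.clear()  # the flaw: a new label discards all earlier motion
        self.label = label
        self.history.append((t, position))

    def velocity(self) -> Optional[float]:
        """Speed from the last two observations, or None if there are fewer than two."""
        if len(self.history) < 2:
            return None
        (t0, p0), (t1, p1) = self.history[-2:]
        return (p1 - p0) / (t1 - t0)

observations = [(0.0, 0.0, "unknown"), (0.5, 0.7, "vehicle"),
                (1.0, 1.4, "bicycle"), (1.5, 2.1, "vehicle")]

for reset in (True, False):
    track = Track()
    for t, pos, label in observations:
        track.update(t, pos, label, reset_on_relabel=reset)
    print(f"reset_on_relabel={reset}: velocity={track.velocity()}")
```

With resetting on, the tracker never holds two points under the same label, so it has no velocity and cannot project where the pedestrian is heading. With the same data and no reset, the speed is recovered immediately.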


Part 4: Opening the Box — The Rise of Explainable AI (XAI)

The response to these failures has been a global push for Explainable AI (XAI). XAI is a set of tools and frameworks designed to make Black Box models interpretable without sacrificing their power.

If Black Box AI is a locked room, XAI is not necessarily a key to the door, but a window we can cut into the wall.

1. LIME (Local Interpretable Model-agnostic Explanations)

Imagine you are in a pitch-black room with a flashlight. You can’t see the whole room (the global model), but you can shine your light on one specific area to see what’s there.

LIME works by testing the AI with slight variations of a single data point.

- Example: If an AI rejects a loan application, LIME will tweak the input: “What if the salary was $5k higher?” “What if the credit score was 10 points lower?”

- By seeing how the AI’s decision changes in response to these tweaks, LIME builds a simple, interpretable map for that specific decision, effectively explaining why the loan was rejected (e.g., “The Debt-to-Income ratio was the deciding factor”).
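The recipe above can be sketched in plain Python: perturb one applicant, query the model, weight each sample by how close it stays to the original, and fit a weighted linear model. The loan model `black_box`, its formula, the feature scales, and the kernel width are all assumptions for illustration. The open-source `lime` package implements a fuller version of this idea.

```python
import math
import random

def black_box(income_k: float, debt_ratio: float) -> float:
    """Stand-in for an opaque loan model (hypothetical formula)."""
    z = 0.08 * income_k - 9.0 * debt_ratio - 0.02 * income_k * debt_ratio - 1.0
    return 1.0 / (1.0 + math.exp(-z))

def solve(a, b):
    """Solve a small linear system a @ x = b (Gauss-Jordan, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def lime_explain(point, scales, n_samples=2000, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around `point`."""
    rng = random.Random(seed)
    k = len(point)
    xtx = [[0.0] * (k + 1) for _ in range(k + 1)]
    xty = [0.0] * (k + 1)
    for _ in range(n_samples):
        # 1. Perturb the instance, feature by feature.
        z = [p + rng.gauss(0.0, s) for p, s in zip(point, scales)]
        # 2. Weight the sample by how close it stays to the original instance.
        dist2 = sum(((zi - p) / s) ** 2 for zi, p, s in zip(z, point, scales))
        w = math.exp(-dist2 / kernel_width ** 2)
        # 3. Accumulate weighted least-squares normal equations (with intercept).
        row = [1.0] + z
        y = black_box(*z)
        for i in range(k + 1):
            xty[i] += w * row[i] * y
            for j in range(k + 1):
                xtx[i][j] += w * row[i] * row[j]
    return solve(xtx, xty)  # [intercept, coef_1, ..., coef_k]

applicant = [45.0, 0.45]  # hypothetical: $45k income, 45% debt-to-income
intercept, coef_income, coef_debt = lime_explain(applicant, scales=[5.0, 0.05])
print(f"approval={black_box(*applicant):.2f}  per $1k income: {coef_income:+.4f}  "
      f"per +0.01 debt ratio: {coef_debt / 100:+.4f}")
```

The fitted coefficients are the local explanation: near this applicant, extra income nudges approval up slightly, while a higher debt-to-income ratio pushes it down. They describe only this neighborhood, not the model as a whole.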

2. SHAP (SHapley Additive exPlanations)

Based on Shapley values from cooperative game theory, SHAP assigns a “payout” value to every feature. It calculates how much each factor (Age, Income, Zip Code) contributed to the final result, either positively or negatively.

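- Why it’s powerful: It provides a consistent mathematical explanation, and the contributions add up exactly to the gap between the model’s average output and this particular prediction. You can look at a decision and say, “Income raised the approval score by 0.20, but missed payments lowered it by 0.50.”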
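For a handful of features, Shapley values can be computed exactly by averaging each feature’s marginal contribution over every coalition of the other features. The sketch below does that for a hypothetical three-feature approval score, treating an “absent” feature as taking a baseline (average applicant) value, which is one common convention among several. Exact enumeration needs a number of model evaluations that grows exponentially with the feature count, which is why the `shap` library relies on approximations and model-specific algorithms instead.

```python
from itertools import combinations
from math import factorial

FEATURES = ["income_k", "missed_payments", "years_employed"]

def model(x: dict) -> float:
    """Hypothetical approval score (not a real lender's model)."""
    return (0.2 + 0.006 * x["income_k"] - 0.08 * x["missed_payments"]
            + 0.01 * x["years_employed"]
            - 0.001 * x["income_k"] * x["missed_payments"])  # interaction term

def shapley_values(x: dict, baseline: dict) -> dict:
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(FEATURES)

    def value(coalition: set) -> float:
        mixed = {f: (x[f] if f in coalition else baseline[f]) for f in FEATURES}
        return model(mixed)

    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi

applicant = {"income_k": 60, "missed_payments": 3, "years_employed": 4}
average = {"income_k": 50, "missed_payments": 1, "years_employed": 5}
phi = shapley_values(applicant, average)
for f, v in phi.items():
    print(f"{f:16s} {v:+.3f}")
# Additivity: baseline score + contributions equals the applicant's score.
print(model(average) + sum(phi.values()), model(applicant))
```

The three contributions (about +0.04, -0.27, and -0.01) sum to -0.24, exactly the gap between the average applicant’s score (0.42) and this applicant’s (0.18). The interaction term is split between income and missed payments rather than assigned arbitrarily.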

3. Counterfactual Explanations

This is the most human-centric method. Instead of math, it gives a “What-If” statement.

- Output: “Your loan was denied. However, if you had earned $2,000 more per year OR paid off your credit card debt, it would have been approved.”

- This gives the user “recourse”, an actionable path to change the outcome, transforming the Black Box from a judge into a coach.
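A counterfactual can be found by searching for the smallest change that flips the decision. The sketch below is a deliberately simple version: a hypothetical linear loan rule stands in for the opaque model, and the search walks a grid one feature at a time. Published counterfactual methods typically optimize over all features jointly, with a distance penalty and constraints that keep suggestions realistic.

```python
def approve(income_k: float, card_debt_k: float) -> bool:
    """Hypothetical loan rule standing in for an opaque model (amounts in $1k)."""
    return 0.05 * income_k - 0.1 * card_debt_k >= 1.02

def smallest_single_changes(income_k: float, card_debt_k: float,
                            income_step: float = 1.0, debt_step: float = 0.5,
                            max_steps: int = 100) -> dict:
    """For each feature on its own, find the smallest grid change that flips a denial."""
    options = {}
    for i in range(1, max_steps + 1):
        if approve(income_k + i * income_step, card_debt_k):
            options["income_increase_k"] = i * income_step
            break
    for i in range(1, max_steps + 1):
        new_debt = card_debt_k - i * debt_step
        if new_debt < 0:
            break  # debt cannot go below zero
        if approve(income_k, new_debt):
            options["debt_paydown_k"] = i * debt_step
            break
    return options

income, debt = 30.0, 6.0  # made-up applicant: $30k income, $6k credit card debt
if not approve(income, debt):
    opts = smallest_single_changes(income, debt)
    print(f"Denied. You would be approved if you earned ${opts['income_increase_k']:.1f}k more "
          f"OR paid down ${opts['debt_paydown_k']:.1f}k of card debt.")
```

For this made-up applicant, the search reports two independent routes to approval, earning $3k more or paying down $1.5k of debt, which is the “OR” structure of the counterfactual statement above.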


Part 5: The Future — Regulation and The Glass Box

The era of “move fast and break things” is ending for AI. Governments and regulatory bodies are stepping in to demand that if you build a Black Box, you must be able to explain it.

The EU AI Act

The European Union has introduced the world’s first comprehensive AI law. It categorizes AI based on risk. High-risk systems (like those used in healthcare, policing, or employment) must have transparency and human oversight.

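- The Mandate: You cannot deploy a “High Risk” AI system in the EU, Black Box or not, unless it meets requirements for technical documentation, record-keeping, transparency toward the organizations that deploy it, and human oversight. This is pushing tech companies to invest more heavily in XAI.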

The Shift to “Glass Box” Modeling

Researchers are now striving to build “Glass Box” models: systems that are interpretable by design. Instead of using a massive neural network for everything, data scientists are turning to Generalized Additive Models (GAMs) and Decision Trees for high-stakes decisions. These models might be slightly less accurate than a massive Deep Learning model, but their logic can be read and checked directly rather than explained after the fact.
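To see what “interpretable by design” means, here is a sketch of a GAM-style scorecard: the score is an intercept plus one function per feature, so each feature’s contribution can be read off directly. The shape functions, thresholds, and intercept are hypothetical; in a real GAM those curves are learned from data.

```python
# Hypothetical per-feature "shape functions". In a real GAM these curves are
# learned from data; here they are hand-written so the example is self-contained.
def f_income(income_k: float) -> float:
    return min(income_k, 100.0) * 0.01        # more income helps, capped at $100k

def f_debt_ratio(ratio: float) -> float:
    return -2.0 * max(0.0, ratio - 0.35)      # no penalty until 35%, then linear

def f_missed(missed: int) -> float:
    return -0.3 * min(missed, 3)              # each missed payment hurts, up to 3

INTERCEPT = -0.2
SHAPES = {"income_k": f_income, "debt_ratio": f_debt_ratio, "missed_payments": f_missed}

def glass_box_score(applicant: dict) -> tuple:
    """Return the total score and each feature's exact, additive contribution."""
    parts = {name: fn(applicant[name]) for name, fn in SHAPES.items()}
    return INTERCEPT + sum(parts.values()), parts

score, parts = glass_box_score({"income_k": 55, "debt_ratio": 0.45, "missed_payments": 1})
print(f"score={score:+.2f}", {k: round(v, 2) for k, v in parts.items()})
```

Because the model has no hidden interactions, the per-feature contributions are the explanation; nothing has to be reconstructed after the fact.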

In the future, we may see a hybrid approach:

- Black Box AI for low-stakes tasks (recommending a song, tagging photos).

- Glass Box / XAI for high-stakes tasks (diagnosing cancer, approving mortgages, sentencing criminals).


Conclusion: Trust, but Verify

Black Box AI has unlocked capabilities we could only dream of a decade ago. It has cracked long-standing challenges in protein structure prediction, revolutionized translation, and is helping us fight climate change. But its opacity is a critical flaw that we cannot ignore.

As we integrate these systems deeper into the infrastructure of society, we must demand more than just accuracy; we must demand accountability. We need to know when the machine is right, but more importantly, we need to know why it’s wrong.

The future of AI isn’t about building a smarter Black Box. It’s about turning the lights on.

Frequently Asked Questions (FAQ)

Is ChatGPT a Black Box AI?

Yes. While OpenAI knows the architecture and the training data, the specific reasoning path the model takes to generate a specific sentence is largely opaque due to the billions of parameters involved.

Can Black Box AI ever be fully trusted?

Trust is relative. We can trust its statistical performance based on testing, but we cannot trust its “reasoning” without XAI tools. In high-stakes fields, blind trust is dangerous.

What is the difference between AI Bias and Black Box Opacity?

Bias is the prejudice in the data or model (the error). Opacity is the inability to see that bias (the cover-up). Black Box opacity makes detecting and fixing bias significantly harder.

Will XAI make AI less accurate?

There is often an “Accuracy-Interpretability Trade-off.” Highly complex models (Black Boxes) are often more accurate than simple ones. However, techniques like SHAP let us interpret complex models without simplifying the model itself. These explanations approximate the model’s behavior rather than reveal its actual reasoning, so they narrow the trade-off rather than eliminate it.